ilcs values on AIX cannot be graphed from njmon data, because ilcs is reported by the mpstat command and is not part of the perfstat library that njmon uses.

ilcs stands for “Involuntary Logical CPU Switches”. A switch occurs when the LPAR is running on processor time in the shared pool but is suddenly kicked off the processor because of contention. This seriously hurts performance.

The script below runs mpstat -d in the background and sends the data to the ‘mpstat’ database, in the ‘mpstat’ measurement. You will need to adapt it to your environment.

Run it as ‘python mpstat.py 5 17280’ to collect 5-second data points for 24 hours.
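
The count argument is just the run time divided by the interval: 24 hours is 86,400 seconds, so at 5-second intervals a full day is 17,280 samples. A small sketch of that arithmetic, using a hypothetical helper `samples_for` that is not part of the script:

```python
def samples_for(duration_seconds: int, interval_seconds: int) -> int:
    """Hypothetical helper: mpstat count needed to cover duration_seconds at the given interval."""
    if interval_seconds <= 0:
        raise ValueError("interval must be a positive number of seconds")
    # Whole samples only; any remainder shorter than one interval is dropped
    return duration_seconds // interval_seconds


# 24 hours at 5-second intervals
print(samples_for(24 * 60 * 60, 5))  # 17280
```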

```python
"""
Program name : mpstat.py
Syntax       : mpstat.py {interval} {count}
Author       : Henrik Morsing
Date         : 09/10/2023
Description  : Script starts mpstat in the background and sends ilcs
               data to the "mpstat" database for Grafana, in the
               "mpstat" measurement.
               It sends data with the tag "cswitch=ilcs" and the
               field "switches".
               The interval parameter is in seconds.
"""

import re          # For regexp matching
import socket      # For gethostname function
import subprocess  # For background process piping
import sys         # For reading command line arguments

from influxdb import InfluxDBClient  # For InfluxDB connection

host = socket.gethostname()

server = "<server>"  # Replace with your InfluxDB host name or IP address

# Load command arguments
interval = sys.argv[1]  # In seconds
count = sys.argv[2]     # Number of samples

# Define the connection (adjust host, port and credentials for your environment)
conn = InfluxDBClient(host=server, port=8086, username='user', password='password123')


def mpstat(interval, count):
    """
    This function calls mpstat to pick out ilcs values.

    It then returns them via 'yield', which enables the function
    to continue listening to, and returning, mpstat output.
    """
    # Start mpstat with its output piped back to this script
    popen = subprocess.Popen(["/usr/bin/mpstat", "-d", str(interval), str(count)],
                             stdout=subprocess.PIPE, universal_newlines=True)

    # Read the output lines from mpstat as they arrive
    for line in iter(popen.stdout.readline, ""):
        # Only the system-wide summary line contains the word "ALL"
        if re.search("ALL", line):
            fields = line.split()  # Split the line into a list of words
            ilcs = fields[15]      # ilcs is at list index 15; check against the mpstat -d header on your system
            yield ilcs             # Return ilcs

    popen.stdout.close()
    popen.wait()  # Reap the finished mpstat process


# Write each ilcs value to InfluxDB as one line-protocol point
for ilcs in mpstat(interval, count):
    line = 'mpstat,host=%s,cswitch=ilcs switches=%s' % (host, ilcs)
    conn.write([line], params={'db': 'mpstat'}, protocol='line')
```
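
The parsing and formatting steps can be checked off-box, without AIX or an InfluxDB server, by pulling them into pure functions. This is a minimal sketch with hypothetical helper names (`parse_ilcs`, `to_line_protocol`, `escape_tag`); the sample line is synthetic, numbered columns rather than real mpstat output. Unlike the script, it matches "ALL" as a whole word and escapes commas, spaces and equals signs in the host tag, which InfluxDB line protocol requires for tag values.

```python
import re


def escape_tag(value: str) -> str:
    """Backslash-escape commas, equals signs and spaces, as line protocol requires in tag values."""
    return re.sub(r"([,= ])", r"\\\1", value)


def parse_ilcs(line: str, index: int = 15):
    """Hypothetical helper: return the ilcs column from an mpstat -d 'ALL' line, else None.

    index=15 mirrors the script; confirm it against your own mpstat -d header.
    """
    fields = line.split()
    if "ALL" not in fields or len(fields) <= index:
        return None
    return fields[index]


def to_line_protocol(host: str, ilcs: str) -> str:
    """Hypothetical helper: one point in the 'mpstat' measurement, tag cswitch=ilcs, field switches."""
    return "mpstat,host=%s,cswitch=ilcs switches=%s" % (escape_tag(host), ilcs)


# Synthetic line: column values equal their index, except index 15 (999)
sample = "ALL 1 2 3 4 5 6 7 8 9 10 11 12 13 14 999 16"
value = parse_ilcs(sample)
if value is not None:
    print(to_line_protocol("aix01", value))  # mpstat,host=aix01,cswitch=ilcs switches=999
```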

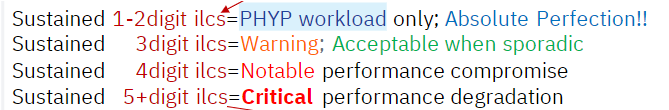

A guideline for judging the performance impact is given in Earl Jew’s POWER VUG presentation ‘Simplest starting tactic for Power10 AIX exploitation V1.2’:

To relieve the performance impact, increase the LPAR’s processor entitlement until the ilcs numbers drop.
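
To see whether an entitlement change helped, one simple approach is to compare the ilcs samples from a window before the change with a window after it. A minimal sketch, assuming you have already exported the `switches` values for each window (for example from Grafana or an InfluxDB query); `summarise` is a hypothetical helper and the numbers below are made up for illustration:

```python
from statistics import mean


def summarise(samples):
    """Hypothetical helper: return (mean, peak) of ilcs samples given as strings or numbers."""
    values = [float(s) for s in samples]
    if not values:
        raise ValueError("no samples to summarise")
    return mean(values), max(values)


# Illustrative values only, not real measurements
before = ["120", "340", "95"]
after = ["12", "30", "8"]
print("before: mean=%.1f peak=%.1f" % summarise(before))
print("after:  mean=%.1f peak=%.1f" % summarise(after))
```

Watching both the mean and the peak helps, since short bursts of contention can be hidden in an average.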